Webhooks the Right Way™

If you’re a developer, dealing with webhooks is part of your life. Nowadays almost every subscription service supports these user-defined HTTP callbacks. For example, when a Lead is added to Salesforce, you may want a background task to gather more information about the company they work for. Or maybe you want Stripe to send you a request when a customer’s payment fails so you can send them dunning emails. You get the drift.

The most common way to deal with webhooks is to add an endpoint to your application that handles the request and response. This has some benefits. For example, keeping all your code in one place means no external dependencies. However, the cons usually outweigh the pros.

Common problems handling webhooks

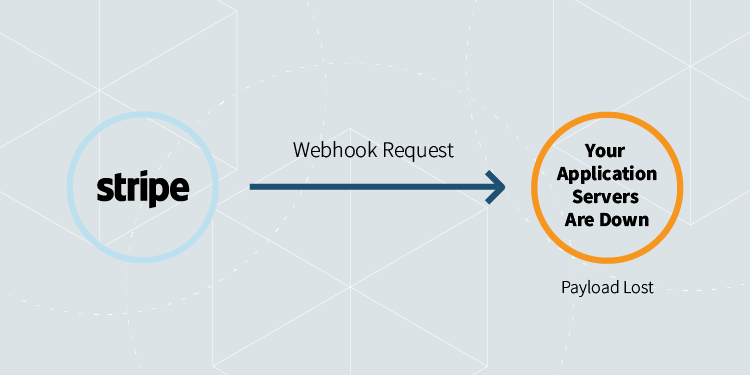

Application downtime

If your application is down or in maintenance mode, it can’t accept webhooks. Many external services retry failed deliveries, but plenty don’t. For those, you’d have to accept losing whatever data they send while you’re offline.
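To make the retry point concrete, here is a generic sketch of the kind of sender-side retry policy many services use. The function name, the five-attempt limit, and the 1s/2s/4s backoff schedule are illustrative assumptions, not any particular provider’s behavior.

```python
import time
from typing import Callable


def deliver_with_retries(
    send: Callable[[dict], bool],
    payload: dict,
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Attempt a webhook delivery, backing off exponentially between tries.

    `send` returns True if the receiver accepted the request (e.g. a 2xx).
    Returns False if every attempt failed, meaning the event is dropped.
    """
    for attempt in range(max_attempts):
        if send(payload):
            return True
        if attempt < max_attempts - 1:
            # Wait base_delay * 1, 2, 4, ... seconds before the next try.
            sleep(base_delay * 2 ** attempt)
    return False


# Example: a receiver that is down for the first two attempts.
attempts = []


def receiver(payload: dict) -> bool:
    attempts.append(payload)
    return len(attempts) >= 3  # "back up" on the third try


waited = []
ok = deliver_with_retries(receiver, {"event": "payment_failed"}, sleep=waited.append)
```

With `max_attempts=1`, the sender behaves like a service without retries: a single failed attempt while your application is down and the event is gone.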

Request queuing

What happens if a flood of webhooks from many different services arrives all at once? Your application (or the reverse proxy, or whatever else sits in front of it) will probably end up queuing those requests alongside your customers’ requests. If your application is customer-facing, this can degrade the user experience or even cause full-blown timeouts.

Too many requests to your frontend can cause request queuing and degrade the end-user experience.
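A toy simulation shows how a burst of webhooks delays an ordinary customer request stuck behind it in a FIFO queue. The worker count, the 250 ms service time, and the burst size are made-up numbers, and real servers and proxies schedule requests in more complex ways; `queue_waits` is a hypothetical helper written for this illustration.

```python
import heapq


def queue_waits(arrivals, service_time, workers):
    """Simulate a FIFO queue in front of `workers` identical servers.

    arrivals: list of (arrival_time, request_id) sorted by arrival_time.
    Returns {request_id: seconds spent queued before service started}.
    """
    free_at = [0.0] * workers  # time at which each server is next idle
    heapq.heapify(free_at)
    waits = {}
    for arrived, request_id in arrivals:
        earliest = heapq.heappop(free_at)  # first server to become idle
        start = max(arrived, earliest)
        waits[request_id] = start - arrived
        heapq.heappush(free_at, start + service_time)
    return waits


# 40 webhooks land at t=0, followed by one customer page request.
burst = [(0.0, f"webhook-{i}") for i in range(40)]
waits = queue_waits(burst + [(0.0, "customer")], service_time=0.25, workers=4)
print(waits["customer"])  # 2.5
```

On its own, the same customer request would start immediately; the 2.5 s wait comes entirely from the ten rounds of webhooks ahead of it.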

Thundering herds and cache stampedes

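Even if you can process every webhook that arrives at once, your system is going to feel the effects one way or another, typically as unwanted spikes in CPU, memory, or I/O. If many of those webhooks miss the same cache entry at the same moment, each may try to rebuild it simultaneously, which is a cache stampede. Unless you’re set up to autoscale, these spikes can slow down or even take down your infrastructure.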
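The asyncio sketch below contrasts starting a handler for every webhook the moment it arrives with capping how many run at once. `process_burst` and `fake_handler` are hypothetical stand-ins, and peak concurrency is used only as a rough proxy for peak CPU, memory, and I/O.

```python
import asyncio


async def process_burst(events, handler, max_concurrency=None):
    """Run `handler` on every event and return the peak number in flight.

    max_concurrency=None starts everything at once (the herd); an integer
    caps concurrent handlers with a semaphore, so the rest wait their turn.
    """
    limit = asyncio.Semaphore(max_concurrency) if max_concurrency is not None else None
    in_flight = 0
    peak = 0

    async def run(event):
        nonlocal in_flight, peak
        in_flight += 1
        peak = max(peak, in_flight)
        try:
            await handler(event)
        finally:
            in_flight -= 1

    async def guarded(event):
        if limit is None:
            await run(event)
        else:
            async with limit:
                await run(event)

    await asyncio.gather(*(guarded(e) for e in events))
    return peak


async def fake_handler(event):
    await asyncio.sleep(0.01)  # stand-in for real webhook processing


print(asyncio.run(process_burst(range(100), fake_handler)))  # 100
print(asyncio.run(process_burst(range(100), fake_handler, max_concurrency=10)))  # 10
```

The cap keeps resource usage flat at the cost of making the tail of the burst wait, which is the same trade-off a queue in front of background workers makes.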

At Iron, many of our customers get around these issues by using IronMQ and IronWorker together. Because IronMQ is HTTP-based, highly available, and built to handle thousands of requests per second, it’s a perfect candidate for receiving webhooks. One of its most useful features is push queues: when a message arrives, IronMQ can push its payload to an external HTTP endpoint, to another queue, or even to IronWorker.
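To illustrate the push-queue idea, here is a toy, in-memory model written for this post; it is not the IronMQ API. `PushQueue`, `subscribe`, `post`, and `retry_pending` are hypothetical names, and each subscriber is a plain function standing in for an HTTP endpoint, another queue, or a worker. The property it shows is the one that matters for webhooks: a rejected push is kept for a later retry instead of being dropped.

```python
from collections import deque
from typing import Callable

# A subscriber receives a payload and returns True if it accepted the push.
Subscriber = Callable[[bytes], bool]


class PushQueue:
    """Toy in-memory push queue: each posted message goes to every subscriber.

    Pushes a subscriber rejects are kept in a pending list instead of being lost.
    """

    def __init__(self) -> None:
        self.subscribers: list[Subscriber] = []
        self.pending: deque[tuple[Subscriber, bytes]] = deque()

    def subscribe(self, subscriber: Subscriber) -> None:
        self.subscribers.append(subscriber)

    def post(self, payload: bytes) -> None:
        for subscriber in self.subscribers:
            if not subscriber(payload):
                self.pending.append((subscriber, payload))

    def retry_pending(self) -> int:
        """Re-push every pending message once; return how many succeeded."""
        delivered = 0
        for _ in range(len(self.pending)):
            subscriber, payload = self.pending.popleft()
            if subscriber(payload):
                delivered += 1
            else:
                self.pending.append((subscriber, payload))
        return delivered


worker_inbox: list[bytes] = []
endpoint_calls: list[bytes] = []


def worker(payload: bytes) -> bool:
    worker_inbox.append(payload)  # stands in for handing off to a worker
    return True


def http_endpoint(payload: bytes) -> bool:
    endpoint_calls.append(payload)
    return len(endpoint_calls) > 1  # down on the first attempt only


q = PushQueue()
q.subscribe(worker)
q.subscribe(http_endpoint)
q.post(b'{"event": "lead.created"}')
```

After `post`, the worker already has the payload and the endpoint’s rejected push sits in `q.pending`; a later `q.retry_pending()` delivers it.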

IronWorker is a container-based, enterprise-ready background job processing system that scales up and down transparently. We have customers running hundreds of jobs concurrently one minute and hundreds of thousands the next.
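IronWorker’s own scaling logic isn’t described here, so the following is only a generic sketch of the kind of rule an autoscaler might apply: size the worker pool to the backlog, then clamp it to configured bounds. `desired_workers` and its parameters are hypothetical.

```python
import math


def desired_workers(
    queued_jobs: int,
    jobs_per_worker_per_min: int,
    min_workers: int = 0,
    max_workers: int = 1000,
) -> int:
    """Size the worker pool to drain the backlog in about a minute.

    The result is clamped to [min_workers, max_workers], so the pool
    shrinks back toward min_workers when the queue empties.
    """
    needed = math.ceil(max(queued_jobs, 0) / jobs_per_worker_per_min)
    return max(min_workers, min(max_workers, needed))


print(desired_workers(100, jobs_per_worker_per_min=60))      # 2
print(desired_workers(500_000, jobs_per_worker_per_min=60))  # 1000 (capped)
print(desired_workers(0, jobs_per_worker_per_min=60, min_workers=1))  # 1
```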

The beauty of the IronMQ and IronWorker integration is that IronMQ can push its payloads directly to IronWorker. Your job is then picked up and processed immediately (or at a specific date and time, if required). You can have a suite of different workers firing off for different types of webhooks, with all of this handled transparently. This is great for a number of reasons.
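The “suite of different workers” idea can be sketched as a small routing table from webhook type to handler. This is plain Python written for illustration, not IronWorker code; the event-type strings, the message shape (`type` plus `payload`), and the handler names are hypothetical, echoing the Salesforce and Stripe examples from the introduction.

```python
from typing import Callable

Handler = Callable[[dict], str]

# Hypothetical routing table: webhook type -> worker function.
HANDLERS: dict[str, Handler] = {}


def worker_for(event_type: str):
    """Decorator registering a handler for one webhook event type."""
    def register(fn: Handler) -> Handler:
        HANDLERS[event_type] = fn
        return fn
    return register


@worker_for("salesforce.lead.created")
def enrich_lead(payload: dict) -> str:
    # Hypothetical: look up more information about the lead's company.
    return f"enriching company {payload['company']}"


@worker_for("stripe.invoice.payment_failed")
def start_dunning(payload: dict) -> str:
    # Hypothetical: kick off a dunning email sequence.
    return f"dunning email queued for {payload['customer']}"


def dispatch(message: dict) -> str:
    """Route a queued webhook message to the worker registered for its type."""
    handler = HANDLERS.get(message["type"])
    if handler is None:
        raise ValueError(f"no worker registered for {message['type']!r}")
    return handler(message["payload"])
```

Adding support for a new webhook type then means registering one more function, without touching the code that receives or queues messages.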

Handling Webhooks the Right Way

Happy application

Now your application is never involved in handling webhooks; it all happens outside your normal application lifecycle. Your application servers never have to absorb the excessive load that could otherwise cause infrastructure issues.

Happy users

All the work you need to do to process webhooks now happens in the background and on hardware that your users aren’t interacting with. This ensures that processing your webhooks won’t affect your user experience.
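In this pattern, whatever receives a webhook only stores and acknowledges it, and the expensive work happens elsewhere. IronMQ plays the receiving role in the setup described here; the sketch below just models the same split inside one Python process, using the standard `queue` and `threading` modules. `receive_webhook`, `background_worker`, and the choice of `202 Accepted` are illustrative assumptions.

```python
import queue
import threading
from typing import Optional

# Raw webhook bodies waiting for background processing; None stops the worker.
inbox: "queue.Queue[Optional[bytes]]" = queue.Queue()
processed: list[bytes] = []


def receive_webhook(body: bytes) -> int:
    """Store the webhook and answer immediately; no processing happens here."""
    inbox.put(body)
    return 202  # Accepted: queued for background processing


def background_worker() -> None:
    """Consume queued webhooks off the request path until told to stop."""
    while True:
        body = inbox.get()
        try:
            if body is None:
                return
            processed.append(body)  # stand-in for the real, slow work
        finally:
            inbox.task_done()


worker_thread = threading.Thread(target=background_worker)
worker_thread.start()
status = receive_webhook(b'{"event": "invoice.payment_failed"}')
inbox.put(None)  # ask the worker to stop once the queue is drained
worker_thread.join()
```

Because `receive_webhook` does nothing but enqueue, its response time stays the same no matter how slow the processing in `background_worker` is.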

This is a pattern our customers are pretty happy with, and we’re constantly improving both IronMQ and IronWorker to meet their additional needs. For example, programmatic validation of external API signatures and custom response codes are on our list. That said, as with microservices, this level of service abstraction introduces its own complexities; dependency management and access management come to mind. We’ve had long conversations about these topics with our customers, and in almost all cases the pros outweigh the cons. This approach has been a success, and we’re seeing it implemented more and more.
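Since signature validation is still on the roadmap, the following is not how IronMQ or IronWorker does it. It’s a generic sketch of a common scheme in which the sender signs the raw request body with HMAC-SHA256 using a shared secret and sends the hex digest along with the request. Providers differ in the details (Stripe, for instance, also signs a timestamp), so treat `verify_signature`, `response_code`, the secret, and the 401/202 choices as assumptions.

```python
import hashlib
import hmac


def verify_signature(secret: bytes, body: bytes, signature_hex: str) -> bool:
    """Check a hex-encoded HMAC-SHA256 signature over the raw request body."""
    expected = hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison, so response timing doesn't reveal how much of
    # a forged signature was correct. Comparing bytes also avoids the TypeError
    # compare_digest raises for non-ASCII str input.
    return hmac.compare_digest(expected.encode(), signature_hex.encode())


def response_code(secret: bytes, body: bytes, signature_hex: str) -> int:
    """Choose a custom response code: 401 if unsigned or forged, else 202."""
    return 202 if verify_signature(secret, body, signature_hex) else 401


secret = b"example-shared-secret"  # hypothetical secret agreed with the sender
body = b'{"type": "invoice.payment_failed"}'
signature = hmac.new(secret, body, hashlib.sha256).hexdigest()

print(response_code(secret, body, signature))         # 202
print(response_code(secret, b"tampered", signature))  # 401
```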

If you have any questions or want to get started with the pattern above, contact us and we’ll be happy to help.
